
Jitter Buffer Delay/Adaptation Rate

You can set the Jitter Buffer delay for each channel. Through the jitter buffer, you can tailor the delay for various IP network types, based on an application's sensitivity to delay and error.

You set the Jitter Buffer delay by configuring the following attributes with the Resource Attribute Configure message.

• Minimum Jitter Buffer Delay

• Maximum Jitter Buffer Delay

• Adaptation Rate

These parameters, especially the Minimum Jitter Buffer Delay, affect the overall packet error rate. In this context, the packet error rate is defined as the rate at which a particular active VDAC channel encounters an under-run condition. An under-run condition exists when the active VDAC channel attempts to obtain an Ethernet packet from the jitter buffer when no packet is available.
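As a rough illustration of this definition, the sketch below computes a packet error rate from a log of fetch attempts. It assumes the rate is expressed as under-runs per fetch attempt; the function name and the boolean log format are hypothetical and are not part of the CSP API.

```python
def packet_error_rate(fetch_results):
    """Return the fraction of fetch attempts that hit an under-run.

    fetch_results: iterable of booleans, True when a packet was available
    in the jitter buffer, False when the fetch found it empty (under-run).
    The per-attempt ratio is an assumption made for illustration.
    """
    results = list(fetch_results)
    if not results:
        return 0.0
    under_runs = sum(1 for available in results if not available)
    return under_runs / len(results)


# Hypothetical log: 10 fetch attempts, 2 of which found no packet.
fetches = [True, True, False, True, True, True, False, True, True, True]
rate = packet_error_rate(fetches)
print(f"packet error rate: {rate:.2f}")
```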

Based upon the jitter/network delay and the packet error rate, you can derive a ratio that describes the performance of the jitter buffer. The algorithm that the active VDAC channel uses to obtain packets from the jitter buffer is adaptive: it takes changing network latencies into account.

If you configure the adaptation rate for higher sensitivity, the active VDAC channel algorithm responds to excessive network delays by moving toward the maximum jitter buffer delay and maintaining that delay. If you configure the adaptation rate for lower sensitivity, the jitter buffer delay increases more gradually.

The sensitivity setting also governs what happens after the algorithm has adapted to its new jitter buffer delay. With a higher adaptation rate, the algorithm maintains that delay for a longer period; with a lower adaptation rate, it corrects more quickly.
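The qualitative behavior described above can be explored with a toy model. The sketch below is not the VDAC-ONE algorithm, which this guide does not specify. It assumes a linear mapping from the adaptation rate (0-12) to a sensitivity value, a proportional step toward the maximum delay on each under-run, and a proportional step back toward the minimum otherwise. All names and constants are hypothetical.

```python
def simulate_jitter_buffer(network_delays_ms, adaptation_rate,
                           min_delay_ms=75.0, max_delay_ms=150.0):
    """Toy model only; NOT the actual VDAC-ONE adaptation algorithm.

    Returns (average jitter buffer delay in ms, number of under-runs).
    """
    if not 0 <= adaptation_rate <= 12:
        raise ValueError("adaptation_rate must be in the range 0-12")

    sensitivity = adaptation_rate / 12         # assumed linear mapping
    grow = 0.1 + 0.8 * sensitivity             # assumed step toward the maximum
    decay = 0.05 + 0.25 * (1.0 - sensitivity)  # assumed step back toward the minimum

    delay = float(min_delay_ms)
    under_runs = 0
    total_delay = 0.0
    for arrival_delay in network_delays_ms:
        if arrival_delay > delay:
            # Packet arrives too late for the current delay: under-run.
            under_runs += 1
            delay += grow * (max_delay_ms - delay)
        else:
            # Packet was available: relax toward the minimum delay.
            delay -= decay * (delay - min_delay_ms)
        total_delay += delay

    count = len(network_delays_ms)
    average = total_delay / count if count else delay
    return average, under_runs


# Hypothetical trace: calm network, a burst of 120 ms delays, then calm again.
trace = [20] * 5 + [120] * 10 + [20] * 20
low_avg, low_errors = simulate_jitter_buffer(trace, adaptation_rate=0)
high_avg, high_errors = simulate_jitter_buffer(trace, adaptation_rate=12)
print(f"rate 0:  average delay {low_avg:.1f} ms, under-runs {low_errors}")
print(f"rate 12: average delay {high_avg:.1f} ms, under-runs {high_errors}")
```

In this toy model, the higher rate trades a larger average delay for fewer under-runs, which matches the direction of the trade-off described in this section. The absolute numbers have no meaning for a real network.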

Select the minimal safe delay for the network to which the VDAC-ONE card is connected. If the application can tolerate packet errors, use a lower adaptation rate and a lower minimum jitter buffer delay. If the application cannot tolerate packet errors, use a higher adaptation rate and a higher minimum jitter buffer delay.

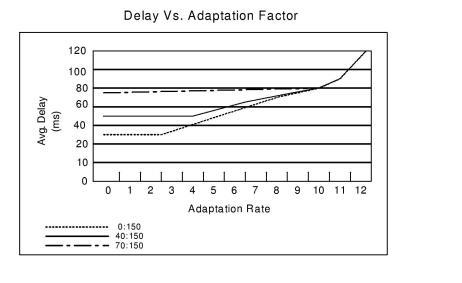

Effect of Adaptation Rate on Jitter Buffer Delay

Figure 2-2, The Effect of Adaptation Rates on Average Jitter Buffer Delay, shows the effect of the adaptation rate on the average jitter buffer delay for three different settings. The average delay increases with higher adaptation rate sensitivity because, after network problems, the jitter buffer increases the delay and maintains it longer. This basic behavior is the same for all networks, but because latency times differ, the actual results vary across networks. With the minimum jitter buffer delay set to 70 milliseconds, the average delay increases only very slowly because far fewer under-runs occur.

Figure 2-2 The Effect of Adaptation Rates on Average Jitter Buffer Delay

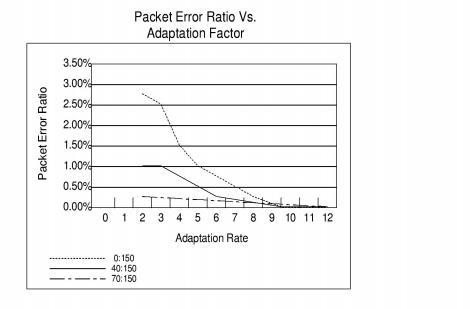

Packet Error Ratio vs. Adaptation Factor

When the jitter buffer delay setting is low, the adaptation factor significantly influences the error ratio, as shown in Figure 2-3, Packet Error Ratio vs. Adaptation Factor. As the minimum jitter buffer delay is increased, fewer packet errors are encountered. As the adaptation factor increases and the average network delay worsens, the number of packet errors decreases.

As the sensitivity of the adaptation factor increases and network delays are encountered, the adaptive algorithm combats packet errors by acquiring and maintaining a higher jitter buffer delay, approaching the maximum delay value.

Figure 2-3 Packet Error Ratio vs. Adaptation Factor

Jitter Buffer Delay Configuration

Through the jitter buffer, you can tailor the delay experienced for each channel for various Ethernet network types, based on an application's sensitivity to delay and error.

To configure the Jitter Buffer Delay, use the Resource Attribute Configure message to configure the following attributes:

• Minimum Jitter Buffer Delay

• Maximum Jitter Buffer Delay

• Adaptation Rate

You configure the Minimum Jitter Buffer Delay by using the Minimum Jitter Buffer Delay TLV in the Resource Attribute Configure message. The range of valid values is 0-150 milliseconds. The default is 75 milliseconds.

You cannot configure the Minimum Jitter Buffer Delay on an established connection.

You configure the Maximum Jitter Buffer Delay by using the Maximum Jitter Buffer Delay TLV in the Resource Attribute Configure message. The range of valid values is 150-300 milliseconds. The default is 150 milliseconds.

You cannot configure the Maximum Jitter Buffer Delay on an established connection.

You configure the Adaptation Rate by using the Adaptation Rate TLV in the Resource Attribute Configure message. The range of valid values is 0-12. The default rate is 7.

You cannot configure the Adaptation Rate on an established connection.
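Before building a Resource Attribute Configure message, an application may want to check the three values against the documented ranges. The sketch below shows one way to do that on the host side. The class and helper names are hypothetical and are not part of the CSP API; only the ranges and defaults come from this section. It does not encode TLVs or send any message.

```python
from dataclasses import dataclass

# Valid ranges as documented in this section (inclusive).
MIN_DELAY_RANGE_MS = (0, 150)
MAX_DELAY_RANGE_MS = (150, 300)
ADAPTATION_RATE_RANGE = (0, 12)


def _check_range(name, value, valid_range):
    """Raise ValueError if value falls outside the inclusive range."""
    low, high = valid_range
    if not low <= value <= high:
        raise ValueError(f"{name}={value} is outside the valid range {low}-{high}")


@dataclass(frozen=True)
class JitterBufferSettings:
    """Hypothetical host-side container; defaults match the documented defaults."""
    min_delay_ms: int = 75
    max_delay_ms: int = 150
    adaptation_rate: int = 7

    def __post_init__(self):
        _check_range("min_delay_ms", self.min_delay_ms, MIN_DELAY_RANGE_MS)
        _check_range("max_delay_ms", self.max_delay_ms, MAX_DELAY_RANGE_MS)
        _check_range("adaptation_rate", self.adaptation_rate, ADAPTATION_RATE_RANGE)


defaults = JitterBufferSettings()
print(defaults)

try:
    JitterBufferSettings(min_delay_ms=200)
except ValueError as err:
    print(f"rejected: {err}")
```

Because none of the three attributes can be changed on an established connection, a check like this belongs before the connection is set up.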